Searching for E.T. and the Cure for Cancer: The Planetary Society Helps Trigger a Computing Revolution


From The Planetary Report, May/June 2007

By Amir Alexander and Charlene Anderson

Source: The Planetary Society

Shown here is the 26-meter (85-foot) radio telescope at Green Bank, West Virginia, used in the first SETI experiment -- Project Ozma. Credit: NRAO / AUI

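It was an opportunity we could not refuse: to be part of an experiment that would let any member of the public make a real contribution to science, with a chance of making a world-changing discovery. That is exactly what SETI@home promised when David Anderson and Dan Werthimer of the University of California, Berkeley brought the project to The Planetary Society and asked for our help in launching it. With the strong support of our members, we jumped in, and nearly six million participants later, SETI@home has become a landmark in the history of scientific computing.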

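Our primary reason for supporting SETI@home was its potential to advance the search for extraterrestrial intelligence (SETI), a cause The Planetary Society has been closely involved with since our founding. But there was more to it than that. SETI@home would also pioneer a new model of computing, in which packets of work are distributed to networked computers to build a virtual supercomputer that could dramatically cut the money and time scientists spend on intractable calculations.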

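The potential for “spin-offs,” the unplanned applications that follow from original research, was evident from the birth of SETI@home, but turning potential into reality is never guaranteed. Wherever there is hope, we give it everything we have. With SETI@home, those hopes have been realized spectacularly.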

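In applications ranging from televisions to video game consoles, the spin-offs of SETI@home keep coming. Planetary Society members truly helped pioneer a new technology for science. Our initial investment has paid off in unexpected ways and will continue to pay dividends for years to come.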

The Computing Dilemma

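Scientists run complex research projects on computers to help them process the masses of data gathered by modern instruments. But existing computer technology has serious limits: large, fast computers are very expensive, and running time on the few supercomputers that exist is precious. Research teams compete for the time available on each machine. Meanwhile, some of the hardest problems involve calculations so complex that they require not hours or days of computing time but years or even decades. If science was to take full advantage of the computing revolution, a radically different approach was needed.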

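A solution took shape in an unexpected form: the Internet. In the 1990s, millions of computers in offices and homes were joined together through the magic of the World Wide Web. Suddenly, with the click of a mouse, users could communicate instantly across borders, continents, and oceans. Could this suddenly connected world also let computers join forces in work aimed at science?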

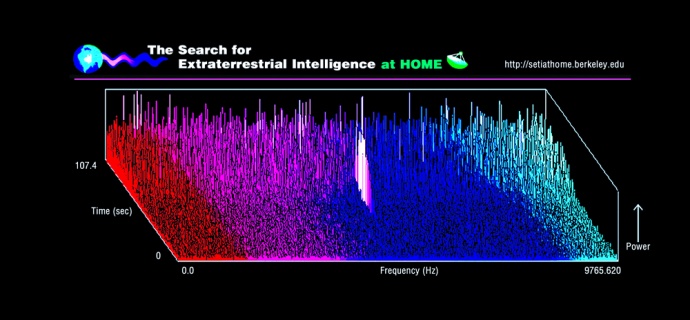

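In 1995, a group of scientists at the University of California, Berkeley set out to find that solution. The idea was the imaginative brainchild of computer scientists David Anderson and David Gedye and SETI scientist Dan Werthimer. Most personal computers use only a small fraction of their computing power and spend much of their time running screen savers. If those wasted CPU and memory resources could be put to work on the masses of SETI data, even the most powerful existing supercomputer would pale in comparison.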

The Birth of Volunteer Computing

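The idea was brilliant, but not every funder was willing to pay to develop it. Anderson and Werthimer had gone looking for start-up funding, but apart from some in-kind donations, no visionary sponsor stepped forward until they called The Planetary Society. Drawing on the Carl Sagan Fund for the Future and a contribution from Paramount Pictures, we pulled together the first $100,000 needed to get the project under way.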

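SETI@home was born. Launched in 1999, it became an international sensation. Within months, millions of personal computers were running SETI@home. The launch succeeded beyond anything even the project’s most optimistic founders had imagined. SETI@home users made possible the most sensitive SETI searches; they also demonstrated the power and potential of volunteer computing. SETI@home became the largest and most powerful computer network to date, completing in months calculations that would normally take decades.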

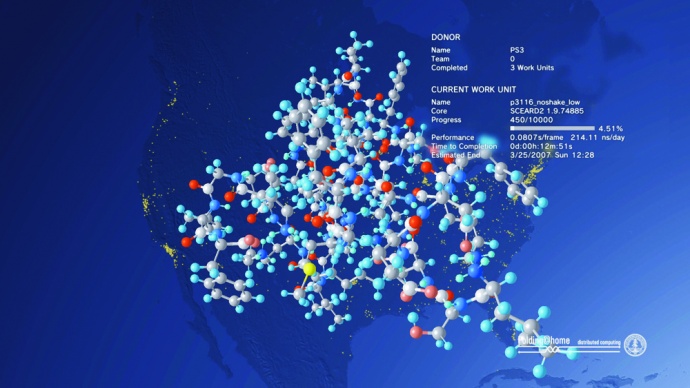

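Scientists in other fields quickly took note and looked for ways to tap this extraordinary resource. A group at Stanford University was trying to unravel the mystery of protein folding, and its problem was very well suited to volunteer computing. Proteins are long chains of amino acids; they are the building blocks of life. To perform their functions, proteins cannot remain simple strands like a string or a necklace but must fold into specific, complex shapes. One of life’s most astonishing puzzles is how reliably, efficiently, and quickly proteins do this. Modeling the process at the atomic scale has proved to be one of the toughest challenges in computational biology. Solving it would not only help scientists better understand the processes of life but could also help fight some of the most threatening human diseases: Parkinson’s, Alzheimer’s, mad cow disease, and certain types of cancer.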

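Vijay S. Pande of Stanford explained that the main difficulty in simulating protein folding is time. Proteins fold on the scale of microseconds (millionths of a second), but an ordinary computer needs about a day to simulate just one nanosecond (a billionth of a second) of folding. At that rate, simulating one microsecond of folding would take roughly three years, and analyzing the folding of a single protein could take a decade or two of computing time. For this problem, that is hardly a workable approach.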
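The arithmetic behind those figures can be checked with a short sketch. The one-day-per-nanosecond rate comes from the text; the 5-microsecond folding time for a single protein is only an illustrative assumption, chosen to show how "a decade or two" arises, and the function name is hypothetical.

```python
# Rate quoted in the article: about one day of computing per simulated nanosecond.
DAYS_PER_SIMULATED_NANOSECOND = 1.0
NANOSECONDS_PER_MICROSECOND = 1_000
DAYS_PER_YEAR = 365.25


def years_to_simulate(microseconds: float) -> float:
    """Computing time, in years, needed on one ordinary PC."""
    days = microseconds * NANOSECONDS_PER_MICROSECOND * DAYS_PER_SIMULATED_NANOSECOND
    return days / DAYS_PER_YEAR


one_microsecond = years_to_simulate(1)   # roughly three years
# ASSUMPTION: a protein that takes 5 microseconds to fold (not from the article).
whole_protein = years_to_simulate(5)     # on the order of a decade or more

print(f"1 microsecond: {one_microsecond:.1f} years")
print(f"5 microseconds: {whole_protein:.1f} years")
```

Spread across many volunteer machines working in parallel, the same total amount of computing shrinks to a manageable wall-clock time, which is the point the article goes on to make.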

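Then SETI@home came along and caught the attention of Pande and his colleagues. In a single year, SETI@home accumulated not a decade or two but millions of years of computing time. Computing power on that scale would allow great strides in simulating protein folding. After spending a year designing their own volunteer computing platform, the Stanford group launched Folding@home, with remarkable results. Within two years, the project’s first scientific results were published in Nature. Although the road to cures for stubborn diseases is still long, as of this writing the project has published 49 peer-reviewed papers in leading scientific journals.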

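Folding@home now connects users from a different realm of cyberspace with the personal computers of its volunteers. In late March, owners of Sony PlayStation 3 (PS3) game consoles were given the chance to combine entertainment with research by running Folding@home on their machines. In less than two days after the release, more than 100,000 users had downloaded the Folding@home software, and nearly 35,000 were running it at any given time. The powerful processor at the heart of the console is designed for extremely fast computation, 10 to 50 times faster than an ordinary PC. As a result, although PS3s make up only one-fifth of all machines running Folding@home, they account for two-thirds of the project’s computing power.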
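The two shares quoted above imply an average per-machine speed advantage, which the following sketch works out. It assumes only the one-fifth and two-thirds figures from the text; the function name is hypothetical, and the result is an average across all active machines rather than a benchmark of any single console.

```python
from fractions import Fraction


def relative_speed(machine_share: Fraction, compute_share: Fraction) -> Fraction:
    """Average per-machine throughput of one group relative to all other machines."""
    group_rate = compute_share / machine_share
    others_rate = (1 - compute_share) / (1 - machine_share)
    return group_rate / others_rate


# One-fifth of the machines deliver two-thirds of the computing power.
ratio = relative_speed(Fraction(1, 5), Fraction(2, 3))
print(f"An average PS3 contributes {ratio} times as much as an average other machine")
```

The resulting factor of 8 sits a little below the quoted 10 to 50 times raw speed advantage, which is plausible if consoles are switched on for fewer hours or do not always run at peak speed.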

The Birth of the Berkeley Open Infrastructure for Network Computing (BOINC)

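Despite the great success of SETI@home and Folding@home, which drew in thousands upon thousands of people and their machines from around the world, the two projects also exposed the limits of the volunteer computing concept. Each research team worked on its own: it had to design its project from scratch, drafting, writing, and testing its own software, and it had to buy and maintain its own servers. That is a challenge even for computer scientists, let alone for scientists in biology, physics, or medicine who know little about designing and operating computer networks. The groups at UC Berkeley and Stanford succeeded in designing and maintaining their admired projects and in managing volunteer computing, but few scientists were likely to follow them down that road.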

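David Anderson, director of the SETI@home project, thought he had a solution. What if volunteer computing could be made simple and convenient? If it could, many hard-pressed research groups would be able to benefit from its extraordinary potential. With this idea in mind, and with the experience of running SETI@home behind him, Anderson developed the Berkeley Open Infrastructure for Network Computing, better known as BOINC.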

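Unlike SETI@home and Folding@home, BOINC is not itself a volunteer computing research project but a body of computer code that anyone wanting to launch such a project can readily use. With relatively minor modifications, the BOINC code can be used by projects in almost any field.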

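The project that led the way for BOINC was SETI@home itself. In June 2004, users began downloading the BOINC version, which was more powerful and flexible than the original. By the end of that year, the migration of SETI@home to BOINC was complete, and the original “classic” version was retired.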

SETI@home’s conversion was an important milestone because BOINC allows PC users to run more than one project easily on their machines. Any volunteer can, for example, decide to run SETI@home 70 percent of the time and a biology project the other 30 percent. As a result, SETI@home’s legions of users are available for other BOINC projects that are just starting out.
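The 70/30 split described above amounts to dividing a machine's available time in proportion to per-project weights. The sketch below illustrates only that proportional idea; it is not BOINC's actual scheduler, and the function name and the 24-hour budget are assumptions made for the example.

```python
def split_cpu_time(shares: dict[str, float], hours: float) -> dict[str, float]:
    """Divide a time budget among projects in proportion to their shares."""
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one project needs a positive share")
    return {name: hours * share / total for name, share in shares.items()}


# A volunteer who gives SETI@home 70 percent and a biology project 30 percent.
plan = split_cpu_time({"SETI@home": 70, "biology project": 30}, hours=24)
for project, allotted in plan.items():
    print(f"{project}: {allotted:.1f} hours per day")
```

Because the shares are relative weights, a volunteer who later adds a third project only needs to assign it a weight; the split among all three follows from the same proportion.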

Soon after, numerous other projects launched their own BOINC programs. Among them was Predictor@home, run from the Scripps Research Institute in San Diego. Like Folding@home, it investigates protein folding, but whereas the Stanford project attempts to determine the sequence of foldings over time, Predictor@home focuses on the internal architecture of the folded protein. Two other BOINC projects use distributed computing to decipher the structure of proteins: Rosetta@home, out of the University of Washington, and Proteins@home, based at the Ecole Polytechnique in France.

Altogether, according to David Anderson, 40 different projects have now joined the BOINC family and use its brand of volunteer computing. Primegrid.com is a privately run mathematical project that searches for very large prime numbers and has already found more than 100 new primes. Einstein@home is based at the University of Wisconsin in Milwaukee and searches for pulsars in the sky based on data from the gravitational wave detectors LIGO and GEO. LHC@home simulates the Large Hadron Collider, a particle accelerator being built at the CERN facility in Geneva, the largest particle physics laboratory in the world. By simulating particles traveling through the accelerator, LHC@home helps with the extremely precise design required for the LHC.

The BBC Comes on Board

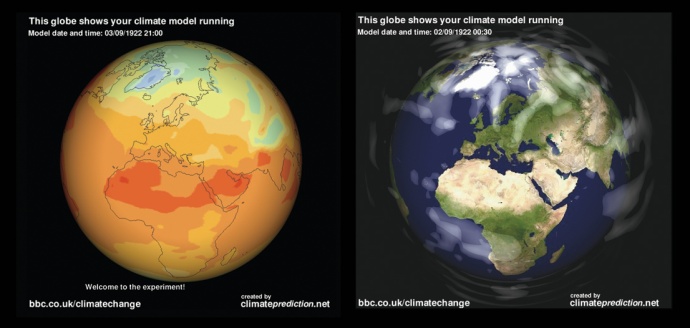

The most popular and high-profile project, except for SETI@home itself, is climateprediction.net, a BOINC project based at Oxford University and the Open University in the United Kingdom. As its name indicates, climateprediction.net investigates one of today’s most pressing concerns for both science and public policy: Earth’s future climate.

“It all began,” explained Co-Principal Investigator Bob Spicer of the Open University, “in the late 1990s when Myles Allen of Oxford noticed the SETI@home screen-saver on a colleague’s computer.” After the concept was explained to him, he began to wonder, “Would it be possible to model the Earth’s climate in this way?”

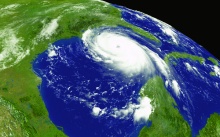

Although the jury is still out on whether climate change is responsible for the increase in the number and ferocity of hurricanes, we definitely need improved methods of climate prediction to better prepare for these devastating storms. Credit: National Oceanic and Atmospheric Administration

It wasn’t easy. Climate models are extremely complex, dividing Earth’s surface into small square regions, then dividing these in turn into separate layers of the atmosphere. The model operates over time, taking into account such factors as the increasing effect of human-generated greenhouse gases that can heat up Earth and sulfur that cools the planet by blocking sunlight.

Then there’s the effect of the oceans, which account for around 50 percent of any climate change. To further complicate things, the atmosphere and the oceans operate on different time scales: the atmosphere can respond to climate change factors in a matter of days, but the oceans can take centuries to change their patterns. All this makes for a very challenging computational exercise requiring the most advanced and fastest computational resources available.

In September 2003, Allen, Spicer, and their colleagues launched climateprediction.net. The first version was simplified and did not account for the oceans. It took on the easier problem of determining what effect a doubling of the amount of carbon dioxide (CO2) in the atmosphere would have on Earth’s climate. Even simplified, climateprediction.net was already doing better than competing models: by January 2005, when the first article appeared in Nature, climateprediction.net had run 2,570 simulations of Earth’s climate, compared with only 127 by the supercomputer at the Met Office, the British government agency responsible for monitoring weather and climate.

In the second stage of the project, Oxford and the Open University were joined by a surprising new partner: the British Broadcasting Corporation (BBC). Eager to engage the public in the debate over climate change, the BBC was planning a series of documentaries on global warming and its effect, due to air in 2006. It offered to make climateprediction.net an integral part of its plans, promote it in its documentaries, and invite the public to take part. It was an offer that Allen, Spicer, and their colleagues could not pass up.

The new version of climateprediction.net, also known as “the BBC experiment,” was far more complex than the earlier one. A realistic ocean was now an integral part of the model, and rather than compare distinct states (current levels of CO2 vs. double those levels), the program followed the evolution of the climate by tracking the contributing factors. Unlike the early version, the new climateprediction.net was a member of the BOINC family.

The BBC, meanwhile, did its part. To inaugurate the project in February 2006, it aired an hour-long documentary, titled Meltdown, on climate change. The documentary invited people to take part in the BBC experiment, and the project was an overnight hit. Within 10 days of the airing of Meltdown, 100,000 people in 143 countries had downloaded the software and were running climateprediction.net on their computers. Within a month, that number had doubled.

According to Spicer, climateprediction.net demands far more of a computer than does SETI@home. A typical PC can process a SETI@home work unit in a few days, but completing a single climateprediction.net simulation could take months. Nevertheless, by the end of 2006, more than 50,000 simulations had been completed and sent back to the project’s headquarters. To mark the completion of the BBC experiment, the network aired another documentary, titled Climate Change: Britain Under Threat, hosted by respected British broadcaster David Attenborough.

Although the BBC’s involvement has ended for now, climateprediction.net is still going strong. Its ultimate goal is to run several million simulations to fully explore the effects of all 23 parameters included in the model. “This is genuine science that cannot be done any other way,” said Spicer. “It uses a state-of-the-art model, and it feeds into an ongoing public debate.”

The Future

SETI@home and climateprediction.net offer glimpses of the power and potential of volunteer computing. This technique provides projects with enormous computing resources and connects science with the public in ways never before possible. Projects such as climateprediction.net, said Bob Spicer, “give members of the public a sense of ownership of a genuine scientific project, in which they fully participated.” Volunteer computing is what makes it all possible.

Anderson is still looking for ways to improve volunteer computing, including expansion into the computer gaming world. Although he considers this a promising direction, most projects are not as compatible with game consoles as is Folding@home. For example, climateprediction.net will never run on a PlayStation 3, Anderson explained, because it requires too much memory. Projects like SETI@home probably can run on a game console, though the improvement over conventional computers will likely not be as spectacular as it was for Folding@home. Nevertheless, Anderson and his team are in discussions with Sony about launching a PlayStation 3 version of BOINC.

BOINC boasts 40 different distributed computing projects, but Anderson is far from satisfied. He has estimated that “ninety-nine percent of scientists who could profit from volunteer computing are only dimly aware of BOINC’s existence.” The reasons, he suggested, are not so different from those that prompted BOINC in the first place: scientists in other fields are rarely knowledgeable about computer science, and their IT (information technology) experts often want to retain control of a project, which is not always possible with this new approach. As a result, volunteer computing is rarely considered by researchers.

To overcome these barriers, Anderson is proposing what he calls “virtual campus supercomputing centers” in universities. They would be university-wide volunteer computing centers that would offer hosting services and technical advice to any research group in search of computing resources. The centers would seek out researchers who could make use of their services. A university could appeal to its alumni and ask them to contribute time on their computers to benefit the virtual computing center. Graduates eager to remain part of their alma mater’s community and contribute to its scientific prowess would be happy to oblige.

Anderson takes heart from the success of the “World Community Grid,” an IBM-run program that hosts and runs volunteer computing operations for selected scientific projects. In Anderson’s vision, the virtual campus supercomputing center will do much the same but on a grander scale. In the future, he hopes, each university campus will have its own center. Somewhere down the line, Anderson believes, a tipping point will be reached, and distributed computing projects will become so common that they will always be considered as a viable option for complex and time-consuming calculations. Then the volunteer computing revolution will be complete.

What of SETI@home, the granddaddy of them all? Eight years after its launch and three years after its conversion to BOINC, the project is still going strong. With hundreds of thousands of users, it accounts for about half of BOINC volunteers. Nowadays, explains Chief Scientist Dan Werthimer, thanks to a new multibeam receiver at Arecibo Observatory and the project’s increased computing power, SETI@home is more powerful and more sensitive than ever before.

As ever more projects follow the path it blazed for volunteer computing and public participation in science, SETI@home continues patiently in its course, crunching data and seeking that signal from outer space. Somewhere in the vast globe-spanning SETI@home network, the elusive sign from E.T. could still be waiting to be discovered.

Was our investment worth it? How can anyone say no? SETI@home and its spin-offs demonstrate The Planetary Society’s faith in the future and our belief that by pursuing discovery and understanding of the universe, we can make this small world of ours a better one. Be proud that you helped make it happen.