Searching for E.T. and the Cure for Cancer: The Planetary Society Helps Trigger a Computing Revolution

From The Planetary Report, May/June 2007

By Amir Alexander and Charlene Anderson

Source: The Planetary Society

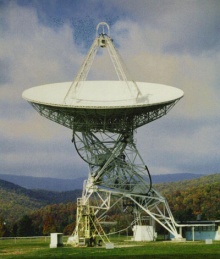

Shown here is the 26-meter (85-foot) radio telescope at Green Bank, West Virginia, used in the first SETI experiment -- Project Ozma. Credit: NRAO / AUI

We couldn’t say no to the opportunity: being part of an experiment in which members of the public could truly contribute to science and have a chance to make a world-changing discovery. That’s what SETI@home promised when David Anderson and Dan Werthimer of UC Berkeley brought the project to The Planetary Society and asked for our help in getting it launched. With our members’ support, we leaped on it, and nearly six million participants later, SETI@home is a landmark in the history of scientific computing.

A prime reason we supported SETI@home was the project’s potential to advance the Search for Extraterrestrial Intelligence (SETI), an endeavor intimately connected to The Planetary Society since our founding. But there was more to it than that. SETI@home would also pioneer a new mode of computing, in which packets of data would be distributed among a network of personal computers, creating a virtual supercomputer that could dramatically decrease the money and time scientists spend on knotty calculations.

The potential for “spin-offs”—applications that serendipitously follow original research—was obvious from SETI@home’s birth, but moving from potential to reality is never guaranteed. One can only hope. In the case of SETI@home, that hope has been realized spectacularly.

In applications ranging from British television to video game consoles, SETI@home spin-offs just keep coming. Planetary Society members truly have helped pioneer new techniques in the conduct of science. Our initial investment has returned amazing results that will continue to deliver benefits over years to come.

A Computing Quandary

Scientists conducting complex research projects depend on computers to help them process the masses of data collected by modern instruments. Existing computer technology has constraints: large and fast computers are expensive, and processing time on the few existing supercomputers is scarce. Research groups vie for the precious time available on each machine. Furthermore, some of the most intriguing scientific riddles involve calculations so elaborate and complex that they require not hours or days but years or even decades of computing time to resolve. If science was to make full use of the computing revolution in SETI research, a different approach would be needed.

A solution arrived in unexpected form: the Internet. In the 1990s, millions of computers, isolated in offices and homes, became linked to one another through the magic of the World Wide Web. Suddenly, with the click of a mouse, users could instantaneously communicate across borders, continents, and oceans. Could this suddenly interconnected world make it possible for computers to join in pursuit of a scientific goal?

In 1995, in Berkeley, California, a group of scientists decided to find out. The idea, hatched by computer scientists David Anderson and David Gedye, along with SETI scientist Dan Werthimer, was brilliant in its simplicity. Most personal computers use only a fraction of their computing capacity, spending much of their time running screensavers. If those idle processor cycles and megabytes of computer memory could be harnessed to process the mass of data collected in the search for extraterrestrial intelligence, the resulting network would dwarf the computing power of the fastest supercomputer in existence.

The Birth of Volunteer Computing

The idea was brilliant, but not one that investors were willing to fund. Anderson and Werthimer beat the bushes looking for startup funds, but aside from a few in-kind donations, no visionary sponsor stepped forward until they called The Planetary Society. Using the Carl Sagan Fund for the Future and a donation from Paramount Pictures, we provided the first $100,000 needed to get the project under way.

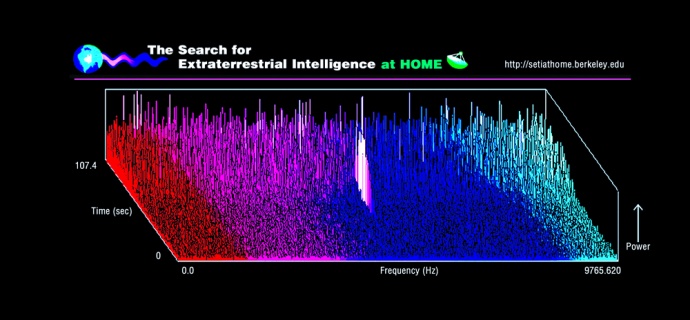

And so SETI@home was born. Launched in 1999, it became an international sensation. Within a few months, millions of personal computers were displaying the dynamic power bar graphics that have become the iconic image of SETI@home. It was a startling success on a scale that even the most optimistic of SETI@home’s founders never imagined. SETI@home users made possible the most sensitive search for extraterrestrial intelligence ever conducted; they also demonstrated the power and potential of volunteer computing. SETI@home became—by far—the largest and most powerful computer network ever assembled, accomplishing within months calculations that normally would have taken decades.

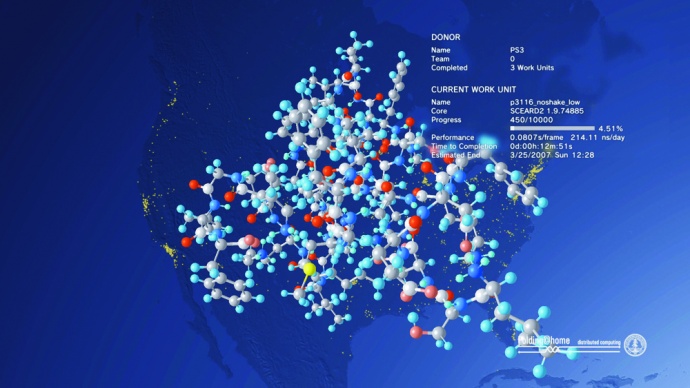

Scientists in other fields quickly took note and searched for ways to take advantage of the remarkable resource. A Stanford University group trying to decipher the mysteries of protein folding thought their project was ideally suited for volunteer computing. Proteins are long strings of amino acids—the building blocks of life. To fulfill their functions, proteins cannot remain as simple strings, or “necklaces,” but need to fold into specific and complex shapes. One of the most amazing mysteries of life is that proteins perform that task reliably, efficiently, and quickly. Modeling this process on the atomic scale proved to be one of the most difficult challenges of computational biology. Resolving it not only would help scientists better understand the processes of life but also could help fight some of the most crippling diseases afflicting humanity—Parkinson’s, Alzheimer’s, BSE (“mad cow disease”), and certain types of cancer.

The chief difficulty in simulating protein folding is time, explained Vijay S. Pande of Stanford University. Proteins fold on a time scale of microseconds (millionths of a second), but it takes an average computer about a day just to simulate the folding over a single nanosecond (one billionth of a second). At that rate, it would take almost three years to simulate a microsecond of folding and perhaps a decade or two of computer time to analyze the folding of a single protein. This is hardly a practical way to resolve the problem.
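The arithmetic behind Pande's estimate can be checked with a short sketch. The rate of one computing day per simulated nanosecond comes from the paragraph above; the function name, the `machines` parameter, and the assumption that the work divides evenly across machines are illustrative simplifications, not a description of how folding@home actually splits its simulations.

```python
# Figure quoted in the article: about one day of computing per simulated nanosecond.
DAYS_PER_SIMULATED_NS = 1.0
NS_PER_MICROSECOND = 1_000
DAYS_PER_YEAR = 365.25


def years_to_simulate(microseconds, machines=1):
    """Computing time in years needed to simulate the given span of folding.

    `machines` is a hypothetical knob that assumes the work splits perfectly
    across identical computers, which real simulations only approximate.
    """
    days = microseconds * NS_PER_MICROSECOND * DAYS_PER_SIMULATED_NS / machines
    return days / DAYS_PER_YEAR


one_machine = years_to_simulate(1)
print(f"1 microsecond on one computer: {one_machine:.2f} years")
print(f"1 microsecond on 1,000 computers: {years_to_simulate(1, machines=1000) * DAYS_PER_YEAR:.1f} days")
```

One thousand days is about 2.7 years, which matches the "almost three years" quoted for a single microsecond.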

Then came SETI@home, and Pande and his colleagues took notice. Within a year, SETI@home had logged not a decade or two but millions of years of computer time. This kind of computing power would go far toward solving the difficulties in simulating protein folding. After a year designing their own volunteer computing platform, the Stanford group launched folding@home with spectacular results. Within two years, the project’s first scientific publication appeared in Nature. Although much of the long road to curing disease still lies ahead, as of this writing, the project has resulted in the publication of 49 peer-reviewed articles in established scientific journals.

Folding@home has now reached beyond the community of PC users to volunteers in other regions of cyberspace. In late March, computer gamers using Sony’s PlayStation 3 were given the chance to combine entertainment with scientific research by running folding@home on their machines. More than 100,000 users downloaded the folding@home software within two days after it became available, with around 35,000 participating at any given time. The powerful processors at the heart of the game consoles are designed to conduct extremely fast calculations, 10 to 50 times faster than an ordinary personal computer. Thus, although PlayStation 3 consoles account for only one fifth of machines running folding@home, they account for two thirds of the project’s computing power.
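The two shares quoted above imply an average per-machine advantage that can be worked out directly. The sketch below is only that arithmetic: the function name is hypothetical, and the result is a fleet-wide average implied by the article's fractions, not a benchmark of any particular console or PC.

```python
from fractions import Fraction


def per_machine_speedup(machine_share, power_share):
    """Average output of one machine in a group relative to one machine outside it.

    machine_share: fraction of all machines that belong to the group
    power_share:   fraction of total computing power the group contributes
    """
    group_output = power_share / machine_share
    rest_output = (1 - power_share) / (1 - machine_share)
    return group_output / rest_output


# Figures from the article: one fifth of machines, two thirds of computing power.
ps3_speedup = per_machine_speedup(Fraction(1, 5), Fraction(2, 3))
print(ps3_speedup)  # 8
```

On these figures, an average PlayStation 3 in the project contributes about eight times as much as an average machine of any other kind.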

The Birth of BOINC

Although both SETI@home and folding@home are highly successful and engage many thousands of people and machines around the world, the projects also exposed the limitations of the volunteer computing concept. Each research group, working separately, had to design its own project from scratch, write and test its own software, and purchase and maintain its own servers. This is a challenge even for computer scientists, not to mention for scientists in fields such as biology, physics, or medicine, who might have little knowledge of how to design and operate a computer network. Although both the Berkeley and the Stanford group succeeded in designing and maintaining their respective projects, as long as conducting a volunteer computing experiment was in itself a major feat of engineering, not many scientists would follow this road.

David Anderson, project director of SETI@home, thought he had a solution. What if volunteer computing was made easy and user-friendly? Then many reluctant research groups could take advantage of its remarkable potential. With this idea in mind, and with the experience of operating SETI@home, Anderson founded the Berkeley Online Infrastructure for Network Computing, known by its catchy acronym, BOINC.

Unlike SETI@home or folding@home, BOINC is not in itself a volunteer computing research project. It is, rather, an easy-to-use computer code available to anyone who wishes to launch such a project. With relatively minor modifications, the BOINC code can be used for projects in almost any field.

The project to lead the way in launching BOINC was SETI@home. In June 2004, users began downloading the BOINC version, which is more powerful and flexible than the original project. By the end of the year, SETI@home’s transition to BOINC was complete, and the project’s “classic” version shut down.

SETI@home’s conversion was an important milestone because BOINC allows PC users to run more than one project easily on their machines. Any volunteer can, for example, decide to run SETI@home 70 percent of the time and a biology project the other 30 percent. As a result, SETI@home’s legions of users are available for other BOINC projects that are just starting out.
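The 70/30 split described above can be illustrated with a toy scheduler. This is a minimal sketch of proportional time sharing, assuming equal-length time slots and hypothetical project names; it is not BOINC's actual scheduling code.

```python
from collections import Counter


def schedule(shares, slots):
    """Assign each time slot to the project furthest behind its target share.

    shares: mapping of project name to an integer weight (for example, percent)
    slots:  number of equal-length time slots to hand out
    """
    total = sum(shares.values())
    done = Counter()
    order = []
    for slot in range(1, slots + 1):
        # Integer arithmetic keeps the comparison exact; ties go to the
        # project listed first in `shares`.
        name = max(shares, key=lambda n: shares[n] * slot - done[n] * total)
        done[name] += 1
        order.append(name)
    return order


plan = schedule({"SETI@home": 70, "biology project": 30}, slots=10)
print(Counter(plan))
```

Over ten slots, the volunteer's computer spends seven on SETI@home and three on the biology project, interleaved rather than run back to back.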

Soon after, numerous other projects launched their own BOINC programs. Among them was predictor@home, run from the Scripps Research Institute in San Diego. Like folding@home, it investigates protein folding, but whereas the Stanford project attempts to determine the sequence of foldings over time, predictor@home focuses on the internal architecture of the folded protein. Two other BOINC projects use distributed computing to decipher the structure of proteins: Rosetta@home, out of the University of Washington, and Proteins@home, based at the Ecole Polytechnique in France.

Altogether, according to David Anderson, 40 different projects have now joined the BOINC family and use its brand of volunteer computing. Primegrid.com is a privately run mathematical project that searches for very large prime numbers and has already found more than 100 new primes. Einstein@home is based at the University of Wisconsin in Milwaukee and searches for pulsars in the sky based on data from the gravitational wave detectors LIGO and GEO. LHC@home simulates the Large Hadron Collider, a particle accelerator being built at the CERN facility in Geneva, the largest particle physics laboratory in the world. By simulating particles traveling through the accelerator, LHC@home helps with the extremely precise design required for the LHC.

The BBC Comes on Board

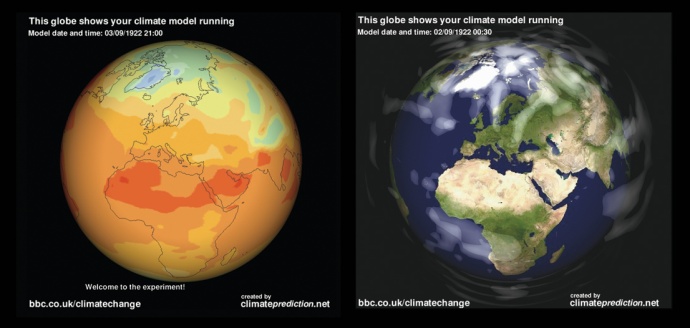

The most popular and high-profile project, except for SETI@home itself, is climateprediction.net, a BOINC project based at Oxford University and the Open University in the United Kingdom. As its name indicates, climateprediction.net investigates one of today’s most pressing concerns for both science and public policy: Earth’s future climate.

“It all began,” explained Co-Principal Investigator Bob Spicer of the Open University, “in the late 1990s when Myles Allen of Oxford noticed the SETI@home screen-saver on a colleague’s computer.” After the concept was explained to him, he began to wonder, “Would it be possible to model the Earth’s climate in this way?”

Although the jury is still out on whether climate change is responsible for the increase inthe number and ferocity of hurricanes, we definitely need improved methods of climate prediction to better prepare for these devastating storms. Credit: National Oceanic and Atmospheric Administration

It wasn’t easy. Climate models are extremely complex, dividing Earth’s surface into small square regions, then dividing these in turn into separate layers of the atmosphere. The model operates over time, taking into account such factors as the increasing effect of human-generated greenhouse gases that can heat up Earth and sulfur that cools the planet by blocking sunlight.

Then there’s the effect of the oceans, which account for around 50 percent of any climate change. To further complicate things, the atmosphere and the oceans operate on different time scales: the atmosphere can respond to climate change factors in a matter of days, but the oceans can take centuries to change their patterns. All this makes for a very challenging computational exercise requiring the most advanced and fastest computational resources available.

In September 2003, Allen, Spicer, and their colleagues launched climateprediction.net. The first version was simplified and did not account for the oceans. It took on the easier problem of determining what effect a doubling of the amount of carbon dioxide (CO2) in the atmosphere would have on Earth’s climate. Even simplified, climateprediction.net was already doing better than competing models: by January 2005, when the first article appeared in Nature, climateprediction.net had run 2,570 simulations of Earth’s climate, compared with only 127 by the supercomputer at the Met Office, the British government agency responsible for monitoring weather and climate.

In the second stage of the project, Oxford and the Open University were joined by a surprising new partner: the British Broadcasting Corporation (BBC). Eager to engage the public in the debate over climate change, the BBC was planning a series of documentaries on global warming and its effect, due to air in 2006. It offered to make climateprediction.net an integral part of its plans, promote it in its documentaries, and invite the public to take part. It was an offer that Allen, Spicer, and their colleagues could not pass up.

The new version of climateprediction.net, also known as “the BBC experiment,” was far more complex than the earlier one. A realistic ocean was now an integral part of the model, and rather than compare distinct states (current levels of CO2 vs. double those levels), the program followed the evolution of the climate by tracking the contributing factors. Unlike the early version, the new climateprediction.net was a member of the BOINC family.

The BBC, meanwhile, did its part. To inaugurate the project in February 2006, it aired an hour-long documentary, titled Meltdown, on climate change. The documentary invited people to take part in the BBC experiment, and the project was an overnight hit. Within 10 days of the airing of Meltdown, 100,000 people in 143 countries had downloaded the software and were running climateprediction.net on their computers. Within a month, that number had doubled.

According to Spicer, climateprediction.net demands far more of a computer than does SETI@home. A typical PC can process a SETI@home work unit in a few days, but completing a single climateprediction.net simulation could take months. Nevertheless, by the end of 2006, more than 50,000 simulations had been completed and sent back to the project’s headquarters. To mark the completion of the BBC experiment, the network aired another documentary, titled Climate Change: Britain Under Threat, hosted by respected British broadcaster David Attenborough.

Although the BBC’s involvement has ended for now, climateprediction.net is still going strong. Its ultimate goal is to run several million simulations to fully explore the effects of all 23 parameters included in the model. “This is genuine science that cannot be done any other way,” said Spicer. “It uses a state-of-the-art model, and it feeds into an ongoing public debate.”

The Future

SETI@home and climateprediction.net offer glimpses of the power and potential of volunteer computing. This technique provides projects with enormous computing resources and connects science with the public in ways never before possible. Projects such as climateprediction.net, said Bob Spicer, “give members of the public a sense of ownership of a genuine scientific project, in which they fully participated.” Volunteer computing is what makes it all possible.

Anderson is still looking for ways to improve volunteer computing, including expansion into the computer gaming world. Although he considers this a promising direction, most projects are not as compatible with game consoles as is folding@home. For example, climateprediction.net will never run on a PlayStation 3, Anderson explained, because it requires too much memory. Projects like SETI@home probably can run on a game console, though the improvement over conventional computers will likely not be as spectacular as it was for folding@home. Nevertheless, Anderson and his team are in discussions with Sony about launching a PlayStation 3 version of BOINC.

BOINC boasts 40 different distributed computing projects, but Anderson is far from satisfied. He has estimated that “ninety-nine percent of scientists who could profit from volunteer computing are only dimly aware of BOINC’s existence.” The reasons, he suggested, are not so different from those that prompted BOINC in the first place: scientists in other fields are rarely knowledgeable about computer science, and their IT (information technology) experts often want to retain control of a project, which is not always possible with this new approach. As a result, volunteer computing is rarely considered by researchers.

To overcome these barriers, Anderson is proposing what he calls “virtual campus supercomputing centers” in universities. They would be university-wide volunteer computing centers that would offer hosting services and technical advice to any research group in search of computing resources. The centers would seek out researchers who could make use of their services. A university could appeal to its alumni and ask them to contribute time on their computers to benefit the virtual computing center. Graduates eager to remain part of their alma mater’s community and contribute to its scientific prowess would be happy to oblige.

Anderson takes heart from the success of the “World Community Grid,” an IBM-run program that hosts and runs volunteer computing operations for selected scientific projects. In Anderson’s vision, the virtual campus supercomputing centers will do much the same but on a grander scale. In the future, he hopes, each university campus will have its own center. Somewhere down the line, Anderson believes, a tipping point will be reached, and distributed computing projects will become so common that they will always be considered as a viable option for complex and time-consuming calculations. Then the volunteer computing revolution will be complete.

What of SETI@home, the granddaddy of them all? Eight years after its launch and three years after its conversion to BOINC, the project is still going strong. With hundreds of thousands of users, it accounts for about half of BOINC volunteers. Nowadays, explains Chief Scientist Dan Werthimer, thanks to a new multibeam receiver at Arecibo Observatory and the project’s increased computing power, SETI@home is more powerful and more sensitive than ever before.

As ever more projects follow the path it blazed for volunteer computing and public participation in science, SETI@home continues patiently in its course, crunching data and seeking that signal from outer space. Somewhere in the vast globe-spanning SETI@home network, the elusive sign from E.T. could still be waiting to be discovered.

Was our investment worth it? How can anyone say no? SETI@home and its spin-offs demonstrate The Planetary Society’s faith in the future and our belief that by pursuing discovery and understanding of the universe, we can make this small world of ours a better one. Be proud that you helped make it happen.